Live signal stream from multiple sources — every agent event captured and typed.

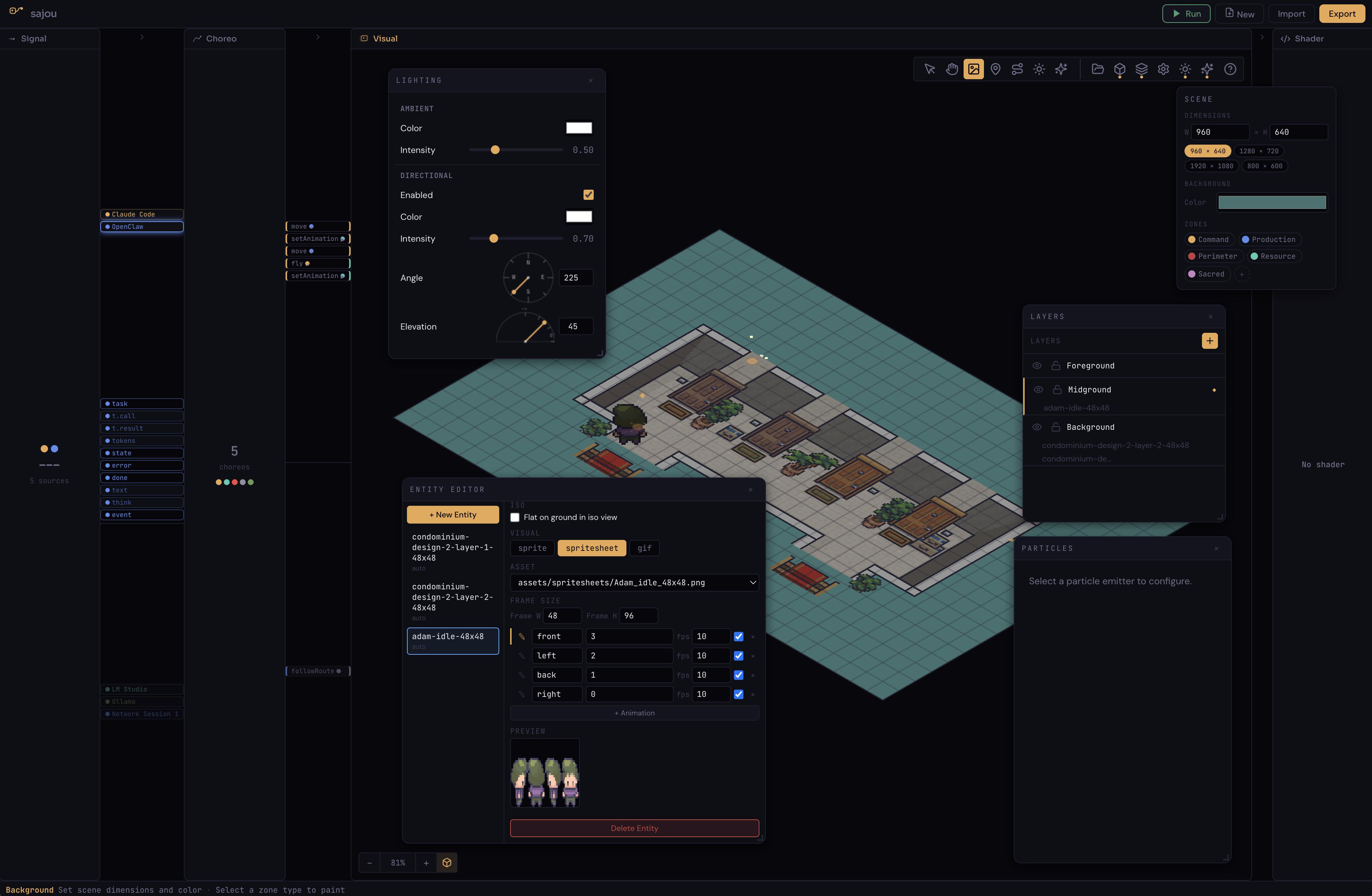

Visual stage — entities, lights, particles on a Three.js canvas.

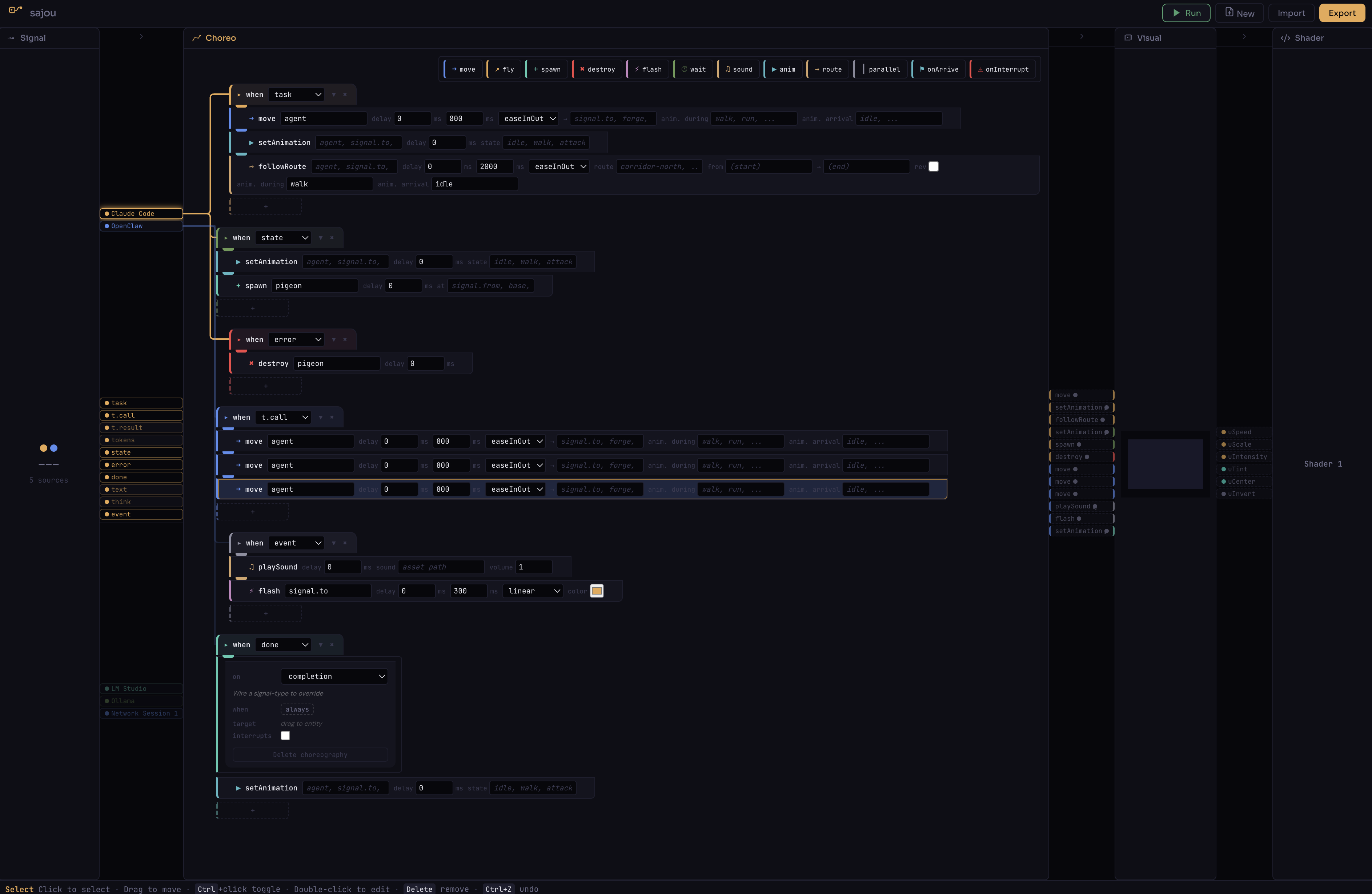

Declarative choreographies — drag, connect, compose.

The signals are the music. The themes are the dancers. sajou is the choreographer.

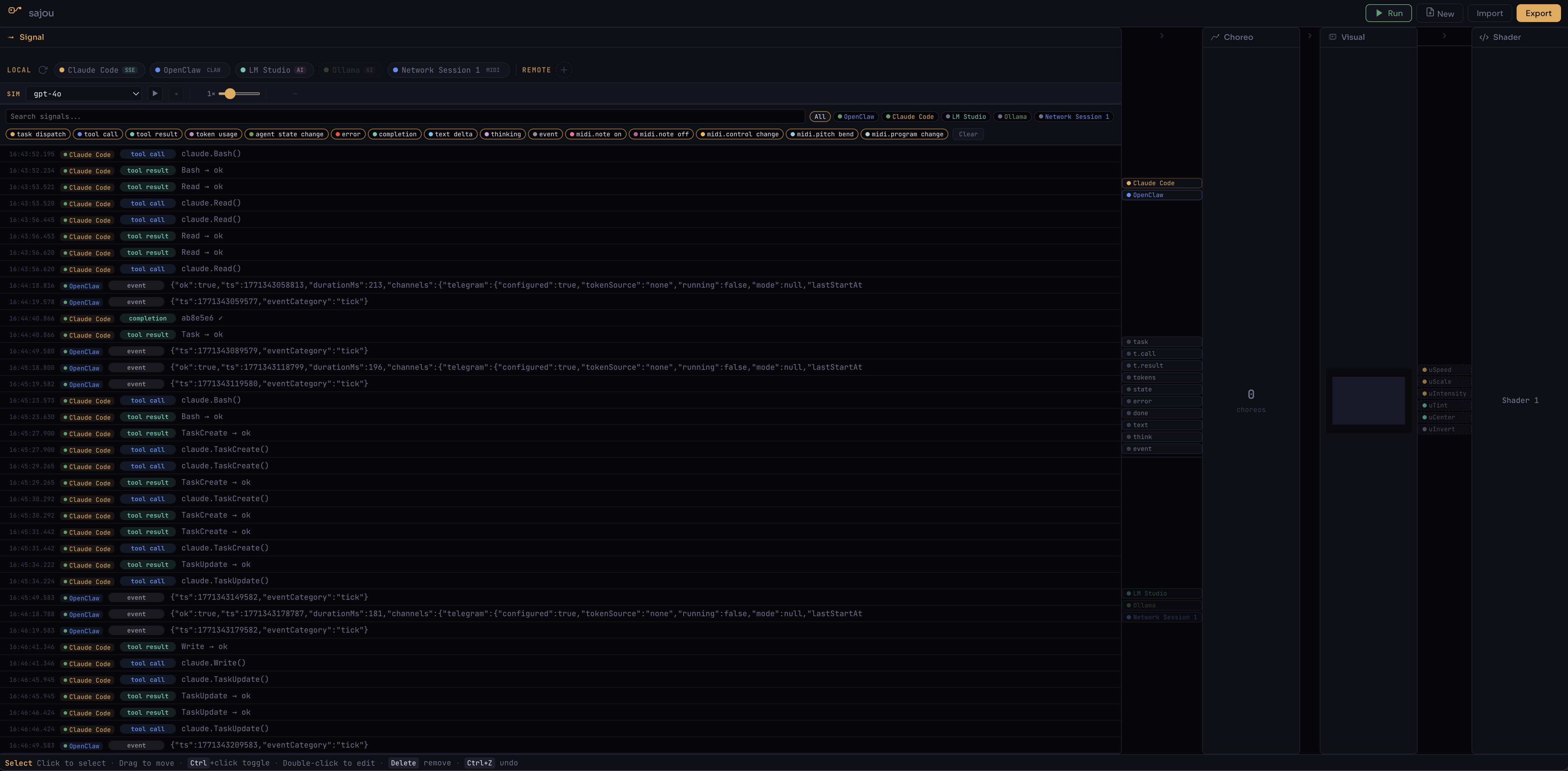

Signals: raw data. Task dispatches, tool calls, errors, MIDI events. The pulse of your agents.
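One way to picture "captured and typed" signals is a discriminated union, sketched below. The type names and fields (`task_dispatch`, `taskId`, `agent`, and so on) are assumptions made for illustration; sajou's actual signal schema may look different.

```typescript
// Hypothetical signal shapes, invented for this sketch (not sajou's real schema).
type Signal =
  | { type: "task_dispatch"; taskId: string; agent: string }
  | { type: "tool_call"; tool: string; agent: string }
  | { type: "error"; message: string; agent: string }
  | { type: "midi"; channel: number; note: number; velocity: number };

// Switching on `type` narrows the union, so each branch sees only its own fields.
function describeSignal(signal: Signal): string {
  switch (signal.type) {
    case "task_dispatch":
      return `${signal.agent} picked up task ${signal.taskId}`;
    case "tool_call":
      return `${signal.agent} called ${signal.tool}`;
    case "error":
      return `${signal.agent} failed: ${signal.message}`;
    case "midi":
      return `MIDI ch${signal.channel} note ${signal.note} vel ${signal.velocity}`;
  }
}
```

With a shape like this, agent events and MIDI events can travel through the same stream while keeping their own fields.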

Choreographies: declarative sequences. JSON that describes what happens visually. Composable by humans and AIs.
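As a rough illustration of what a declarative, composable sequence could look like, here is a minimal sketch. The JSON layout and every name in it (`on`, `steps`, `action`, `entity`, `durationMs`, `compose`) are hypothetical, chosen only to show the idea, and are not sajou's actual format.

```typescript
// Hypothetical choreography format; field names are illustrative only.
interface Step {
  action: "move" | "flash" | "spawn";
  entity: string;
  durationMs: number;
}

interface Choreography {
  on: string; // signal type that triggers the sequence
  steps: Step[];
}

// The sequence is plain JSON, so a person or an AI can write it.
const choreographyJson = `{
  "on": "tool_call",
  "steps": [
    { "action": "move", "entity": "agent", "durationMs": 400 },
    { "action": "flash", "entity": "forge", "durationMs": 150 }
  ]
}`;

const choreography = JSON.parse(choreographyJson) as Choreography;

// Composing two sequences with the same trigger: run one, then the other.
function compose(a: Choreography, b: Choreography): Choreography {
  if (a.on !== b.on) throw new Error("cannot compose different triggers");
  return { on: a.on, steps: [...a.steps, ...b.steps] };
}

// Total running time of a sequence, assuming its steps play back to back.
function totalDuration(c: Choreography): number {
  return c.steps.reduce((sum, step) => sum + step.durationMs, 0);
}
```

Because the sequence is data rather than code, composing two of them is just list concatenation, and a renderer decides how each `action` actually looks.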

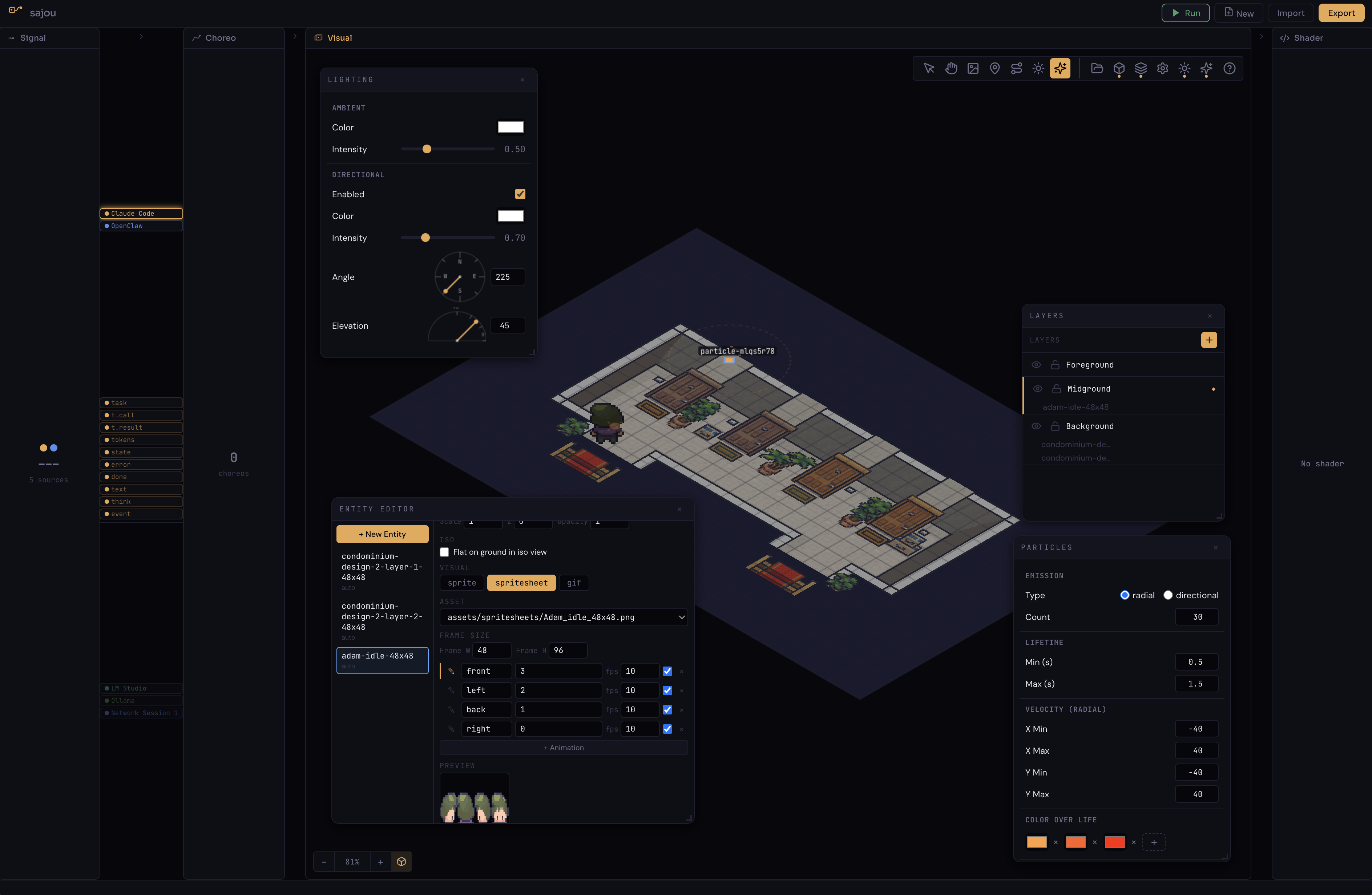

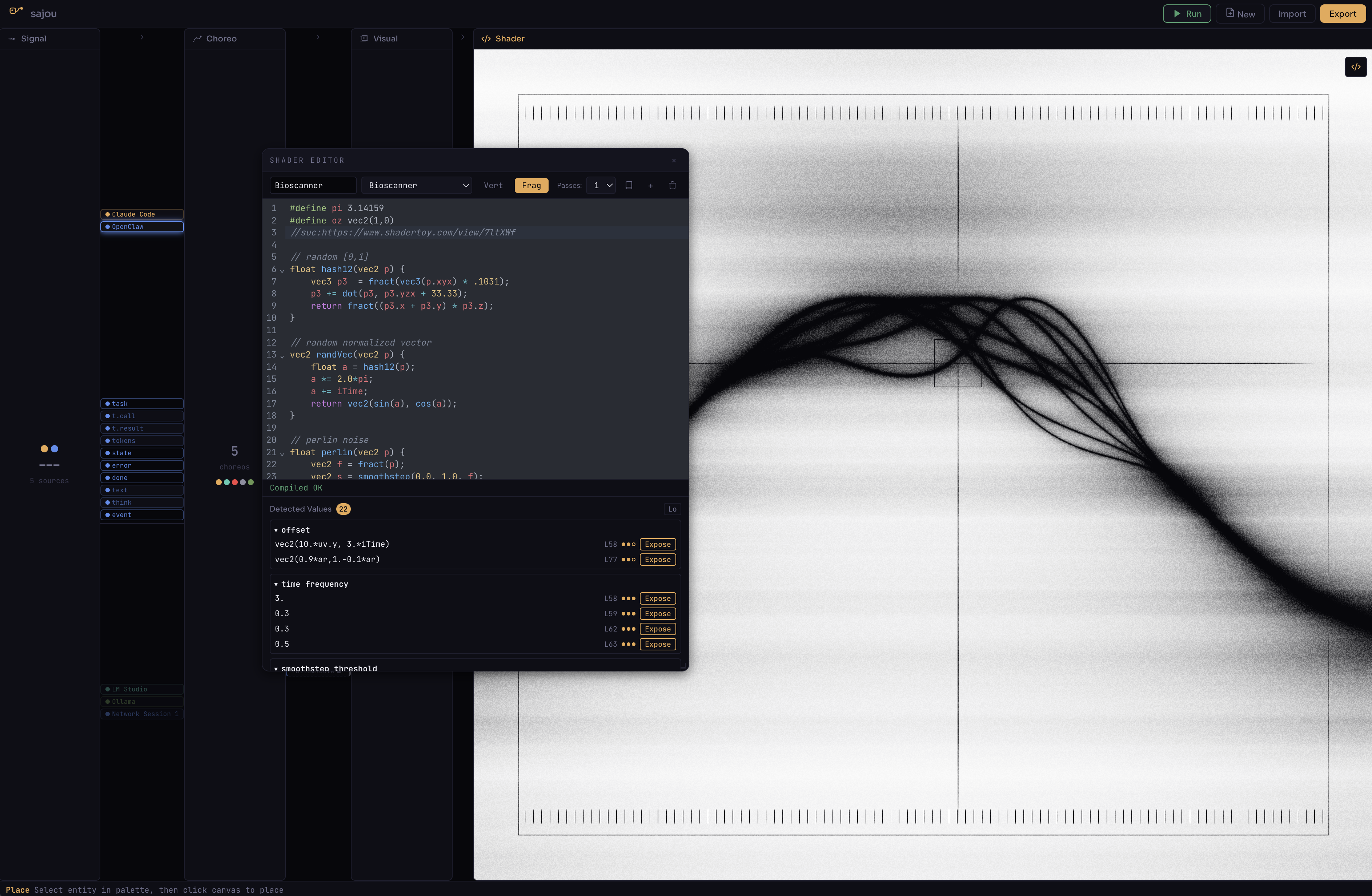

Themes: the render. Three.js, shaders, particles, lights. Same data, different scene, different experience.

Live signal stream from Claude Code

Choreography editor with wiring

Live shader editor — GLSL code, uniforms, real-time preview

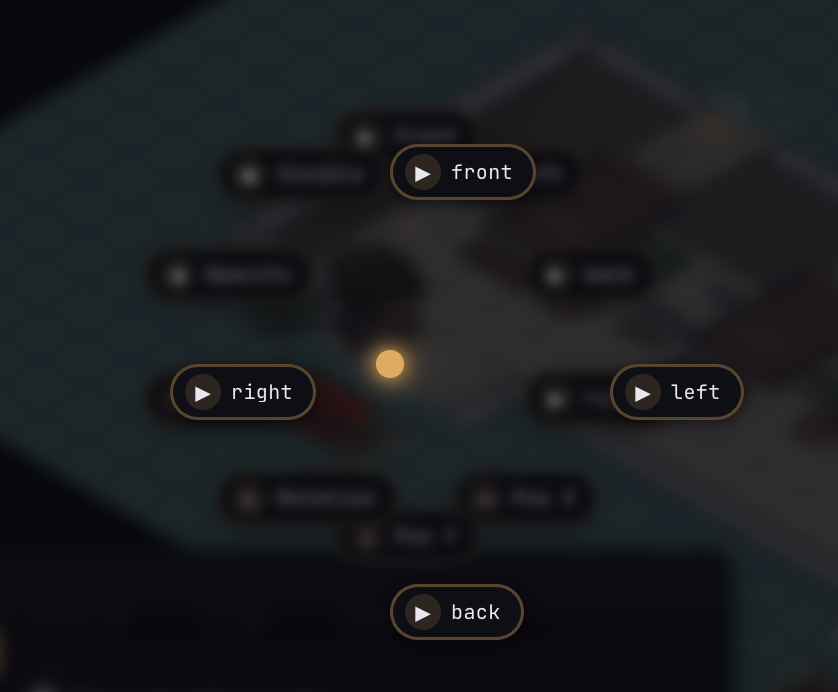

Radial entity controls

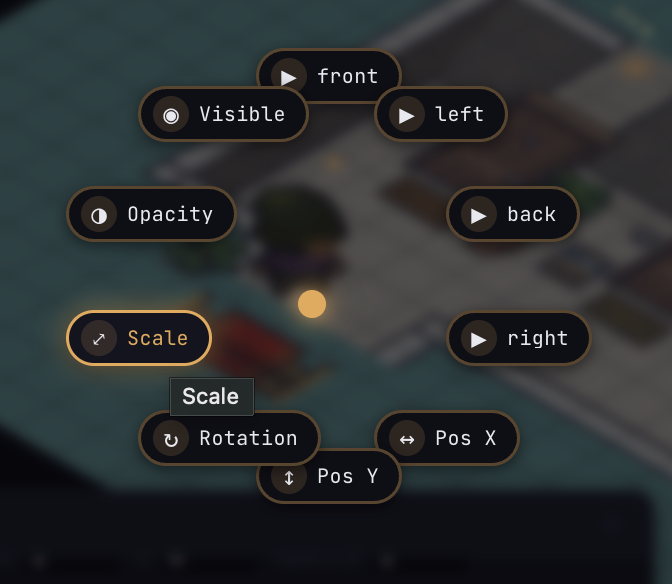

Transform properties

Noise field shader

Watch your AI agents work the way you would watch a StarCraft match, not by reading logs.

MIDI controllers, shaders, particles. sajou is a visual instrument.

Tell an AI: "Make me a My Little Pony theme." sajou plays it.